OK, we get it. There is (still) no solid evidence that intranasally administered oxytocin gets into the brain. Many studies used flawed techniques to measure oxytocin in the blood. The seminal works that inspired human oxytocin research do not replicate well. Still, there are so many reports of relationships between oxytocin and social behaviors. It is impossible that not a single one of these effects is real. Isn’t it?

It is possible that some effects are real. But in a world where only 36% of psychological experiments replicate, and prominent newspapers are easily fooled into making catchy headlines out of flawed studies published in obscure journals, the possibility that none of them is real is one we should seriously consider. And here’s why.

In one of my favorite psychological studies, Shaul Shalvi and colleagues investigated the drivers of dishonest behavior. Subjects had a single opportunity to privately roll a 6-sided die and then report the outcome to the experimenter. Subjects had been instructed that they would get paid, in cash, based on their reports: for example, if one reported “5”, she or he would be paid $5. This gave subjects financial incentives to lie and report high numbers, regardless of the true outcomes.

Some subjects indeed lied, but the distribution of reported outcomes showed that only a small fraction of them did. Despite the financial incentive, and even without a chance of getting caught, subjects reported high outcomes only slightly more often than low outcomes.

But it only took a slight change in the instructions to turn many subjects into liars. In a follow-up study, subjects were instructed to roll the die three times and report only the first outcome. The frequency of “high outcomes” was strikingly greater this time. A simple analysis revealed that subjects reported the highest of the three throws, instead of reporting the first outcome. Giving people a self-justification opportunity (they did see a high outcome after all, right?) made it psychologically easier to break the rules.

When scientists run a study that examines a false hypothesis and use a statistical test to evaluate its outcomes, what they do is statistically equivalent to rolling a 20-sided die (D&D, anyone?). The norm is that scientists must predict, before rolling the die, what the outcome will be (for example, “20”) and report a positive finding only if this is indeed what they find. This practice is supposed to ensure that only 5% of the studies that test false hypotheses end up reporting a positive result.

Glancing through the scientific literature in most fields (oxytocin research is no different), one can easily tell that most of the reports are of positive discoveries. This reflects a well-known publication bias: scientists selectively report only positive findings, which are more likely to get published in top journals, and store their negative findings in their file drawers. This means that the number of positive reports does not depend only on the true state of the world, but also on the overall number of studies that are conducted. If researchers roll the 20-sided die many times, selectively report the “20”s, but store all of the “1”s, “2”s and the rest of the outcomes deep in their file drawers, we are assured to get many positive reports of false findings.
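To make the file-drawer logic concrete, here is a minimal simulation sketch. It assumes every study tests a false hypothesis and treats each one as an independent roll of a fair 20-sided die; the function name `run_field`, the seed and the figure of 1,000 studies are made up for illustration and do not describe any real literature.

```python
import random

def run_field(num_studies, sides=20, seed=0):
    """Simulate studies of a false hypothesis as independent rolls of a fair die."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    rolls = [rng.randint(1, sides) for _ in range(num_studies)]
    published = [r for r in rolls if r == sides]    # only the "20"s get reported
    file_drawer = [r for r in rolls if r != sides]  # every other outcome is stored away
    return published, file_drawer

# Hypothetical field: 1,000 studies, none of which tests a real effect.
published, file_drawer = run_field(num_studies=1000)
print(f"studies conducted:    {len(published) + len(file_drawer)}")
print(f"positive reports:     {len(published)}")
print(f"left in file drawers: {len(file_drawer)}")
```

Roughly one roll in twenty comes up “20”, so the count of positive reports grows with the number of studies conducted even though no effect exists, and a reader who only sees the published pile sees nothing but positive findings.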

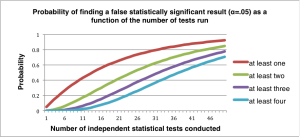

How bad is this problem? Depends on how many studies are conducted. If enough studies are carried out, every hypothesis will eventually be supported by some reports of experimental “evidence”. Ironically, the more trendy a theory becomes, the more likely researchers are to test it, and the more likely the field as a whole is to find false effects by mere chance. The chart shows that the probability of finding at least one “statistically significant” effect (the red line) grows fast as a function of the number of studies conducted (the exact probability is 1 - (1 - α)^N, where α = .05 and N is the number of studies). The chances of finding at least two, three and four results follow similar patterns.
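For readers who want to reproduce these curves, here is a small sketch. It assumes the N studies are independent and that every tested hypothesis is false, so the number of “significant” results follows a binomial distribution; the function name `prob_at_least` is my own.

```python
from math import comb

def prob_at_least(k, n, alpha=0.05):
    """Chance of at least k 'significant' results in n independent tests of false hypotheses."""
    # Sum the binomial probabilities of 0 .. k-1 hits, then take the complement.
    below = sum(comb(n, i) * alpha**i * (1 - alpha) ** (n - i) for i in range(k))
    return 1 - below

for n in (1, 5, 10, 20, 50, 100):
    row = ", ".join(f"at least {k}: {prob_at_least(k, n):.2f}" for k in (1, 2, 3, 4))
    print(f"N = {n:3d} -> {row}")
```

For k = 1 the sum reduces to (1 - α)^N, so the function returns exactly the 1 - (1 - α)^N expression given above.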

This problem is enhanced in oxytocin research. The experimental procedure typically includes either blood draws or pharmacological treatments, and in such cases, there’s an ethical justification to maximize the knowledge gained from each subject. Therefore, it is the norm to run several tasks, some of which measure more than a single behavioral outcome. More data is better, but that depends on how it is being used. In practice, testing multiple hypotheses using statistical tests that were designed for a single study greatly increases the probability of finding a false association. But wait, there’s more.

Hormonal systems are complex to study, and few simple cause-and-effect relationships exist in nature. Therefore, different subjects might respond differently to pharmacological treatments. Intranasal oxytocin administration might make males more trusting but have the opposite effect on females. The spray might not work when interacting with someone who seems unreliable. Or perhaps one must be betrayed first before the nose spray exhibits its trust-enhancing effects.

Back in 2011, a well-cited review (over 500 citations in 4 years!) pointed out that only half of oxytocin administration studies had reported main effects of the treatment. The rest only found effects in sub-populations of the subject pool, or under specific environmental conditions. The authors suggested that the field could benefit from exploration of these factors. Behind this well-intentioned proposition hides a potential disaster.

Mining data for associations without an explicit hypothesis, in search of true predictive power, is not straightforward. An entire discipline in computer science is dedicated to dealing with such problems. But in practice, oxytocin researchers responded to the review’s proposition by collecting many variables that could potentially moderate the effects of oxytocin (sex, age, health status, relationship status, personality, genetics, environmental conditions and more), selecting post hoc the moderators whose interactions with oxytocin treatment were “statistically significant”, and reporting them as positive results, all while using statistical tests that were designed to keep the false-positive rate of a single hypothesis test low.

In the absence of an explicit guiding theory, every variable was a potential suspect. But here’s the catch: each test of whether oxytocin works only under specific conditions is statistically equivalent to rolling the 20-sided die again. With the collection of more and more variables, it becomes hard not to find a false effect by mere chance.

Suppose one collects only the big five personality traits (which are considered statistically independent of each other) and gender, to test whether these factors moderate the effects of oxytocin on one target behavior. By doing so, he or she actually rolls the die 18(!) times: testing for a main effect of oxytocin (one), for effects in each gender (two more), for personality-dependent effects (five more) and finally for every possible gender-personality interaction (ten more). As the chart above has shown, if one uses the standard procedure of statistical testing, which was designed to test a single hypothesis, the chance of at least one false discovery has already reached 60%. When running a battery of three tasks for every subject who underwent oxytocin treatment, we end up rolling the die 54(!) times, and finding at least one false effect becomes almost guaranteed: over 90%. The chance of finding at least three different effects is over 50%. Given these numbers, it is not surprising that interaction effect studies in social psychology are less likely to replicate (22% success) even compared to the (already low) replicability rates in the rest of the field.
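The count of 18 and the resulting probabilities can be checked with a short sketch. It lists the tests explicitly and assumes, as a simplification, that all of them are independent and each uses α = .05; the test labels and the helper name `chance_of_false_positive` are only illustrative.

```python
from itertools import product

TRAITS = ["openness", "conscientiousness", "extraversion",
          "agreeableness", "neuroticism"]
GENDERS = ["female", "male"]

# Enumerate every test run on a single target behavior.
tests = ["main effect of oxytocin"]
tests += [f"oxytocin effect in {g} subjects" for g in GENDERS]
tests += [f"oxytocin x {t}" for t in TRAITS]
tests += [f"oxytocin x {t} in {g} subjects" for g, t in product(GENDERS, TRAITS)]

def chance_of_false_positive(num_tests, alpha=0.05):
    """Chance of at least one false positive, assuming independent tests."""
    return 1 - (1 - alpha) ** num_tests

per_task = len(tests)   # tests on one task
battery = 3 * per_task  # same tests repeated on three tasks

print(f"{per_task} tests: {chance_of_false_positive(per_task):.0%}")
print(f"{battery} tests: {chance_of_false_positive(battery):.0%}")
```

In real data the tests are correlated (they reuse the same subjects), so these figures are best read as a rough guide to how fast the problem grows rather than as exact error rates.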

With promising initial findings, extreme media hype and nearly guaranteed chances of finding significant results that end up in top academic journals, researchers had strong incentives to jump on the intranasal oxytocin bandwagon and speed up the accumulation of the literature. Ironically, this method is also cheaper than studying other hormones: because one cannot verify whether the substance really gets into the subjects’ brains, there was no justification to invest in either collecting saliva or blood samples or performing any type of expensive hormonal assay for the sake of a manipulation check.

Researchers also had several reasons that justified continuing to do what they were doing. There is an ethical rationale to make the best effort to publish results that were obtained from human subjects who underwent a pharmacological treatment; we still don’t know much about the time course and the exact administration procedures required to make the spray “work” well, so it was easy to justify why only some of the studies worked as predicted; running many tests and only reporting the positive results might have been the norm in other fields. Last but not least, most of the results made sense in hindsight, even if there was a good chance that they were just statistical artifacts.

The future will tell whether my conspiracy theory reflects what was really going on in oxytocin research. Over the past few months I have come to believe that the likelihood has increased. A recent meta-analysis co-authored by Larry Young, the scientist who made some of the most important discoveries relating oxytocin to social behavior in animals, estimated that the average statistical power (the chance of finding true effects if they truly exist) in oxytocin research is between 12% and 16%. This means that even if all of the effects were real, the studies investigating them did not use enough subjects to meaningfully study them.

This leaves us in a difficult place: in order to reliably extract true signals from the literature, we should directly replicate all studies using much larger samples, on the order of hundreds, sometimes thousands of subjects. Given that we are unsure whether intranasal oxytocin gets into the brain in the first place and have no means to conduct a manipulation check, it is not obvious that pursuing this direction is worth scientists’ time, effort and money.
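To get a feel for where “hundreds, sometimes thousands” comes from, here is a rough sketch using the textbook normal approximation for a two-group comparison of means. The effect sizes (Cohen’s d) in the loop are hypothetical values picked for illustration, not estimates from the oxytocin literature, and the 80% power target is a common convention rather than something taken from the meta-analysis.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(effect_size, power=0.80, alpha=0.05):
    """Approximate subjects per group for a two-sided, two-sample comparison of means."""
    z = NormalDist()                        # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)      # critical value for the two-sided test
    z_power = z.inv_cdf(power)              # quantile matching the desired power
    return ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)

# Hypothetical standardized effect sizes, from medium to very small.
for d in (0.5, 0.3, 0.2, 0.1):
    print(f"d = {d}: about {n_per_group(d)} subjects per group")
```

Because the required sample grows with the inverse square of the effect size, halving the assumed effect roughly quadruples the number of subjects needed.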

Great post, Gidi. Only issue that I had is your mention of ‘mediators’.. I think you actually mean ‘moderators’ – factors that influence the extent to which OT influences a particular end-point.

Thanks Justin, you are right 🙂
